The Productivity Boom Has Created a Data Security Blind Spot

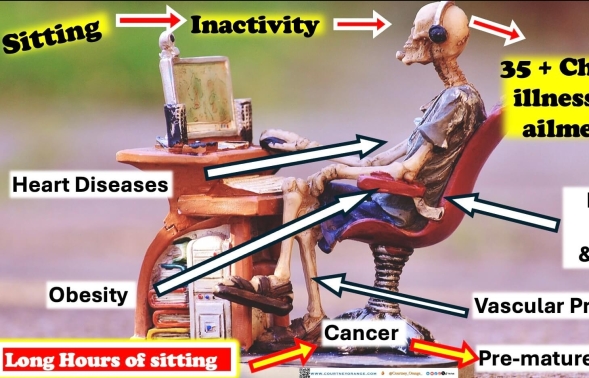

In the rush to adopt AI, many businesses overlooked the risks of sharing confidential data. Employees often paste client financials, employee details, or sensitive meeting content into AI tools to get faster results. The output may be impressive, but the data they entered may now be stored or processed in ways that are not secure or compliant.

Many consumer-grade generative AI tools were not built with business security in mind. Some store user inputs, others use them to train their models, and many do not meet data residency or compliance requirements. This creates a blind spot for IT and compliance teams, who often cannot see what data has left the organisation.

Generative AI Chatbots Remember More Than They Should

Popular tools like ChatGPT, Claude, and Gemini are easy to use but not always secure. Unless you are using an enterprise-grade version with the right settings, any data you enter could be stored and used to improve the provider's models. This means confidential information might end up in systems outside your control.

The bigger issue is that many employees use these tools without formal approval. This leaves organisations exposed to risks they may not even be aware of.

AI Meeting Tools Raise New Compliance Concerns

AI meeting tools like Otter.ai and Fathom offer useful features. They transcribe conversations, capture action items, and save time. But they also raise serious compliance concerns.

Some tools record meetings automatically or with only one participant's consent. This becomes problematic in regulated industries, in jurisdictions that require every participant to consent to a recording, or when international stakeholders are involved. Key questions to ask include:

Were all participants informed, and did they consent?

Where are the recordings stored?

Who has access to them?

Are they encrypted and compliant with regulations like GDPR or HIPAA?

These tools are often adopted without a full review, which increases the risk.

Securing Microsoft Copilot for Enterprise Use

Microsoft Copilot stands out because it was designed with enterprise security in mind. It operates within the Microsoft 365 environment, using your organisation's existing identity, access, and compliance controls. Your data stays within your Microsoft 365 tenant boundary and is not used to train the underlying foundation models.

However, Copilot inherits your internal access policies: it can surface any content a user already has permission to open. If those permissions are too relaxed, users might see data they should not. Before rolling out Copilot, businesses should audit permissions, tighten security groups and sharing settings, and educate teams on proper use.

Ready to Embrace AI Securely?

If your team is already experimenting with AI or you are considering deploying Microsoft Copilot or similar tools, now is the time to ensure it is done right.

Talk to Extech Cloud about how we can help you adopt AI securely and strategically. We will help you identify what is safe, what is not, and how to make AI work for your business.

Contact us today to start your secure AI journey.